In this article, I will briefly introduce several ways to share gRPC Protocol Buffers (.proto) files across different microservices.

Background: The Problem of Sharing Protocol Buffers Files Between Clients and Servers

Nowadays, more and more projects use a microservice architecture. Once we broke our project's data processing pipeline into microservices, choosing a proper communication framework to deliver data between them became very important. In the past, most people chose REST APIs because of their familiarity, maturity, and the large number of tools available. However, REST API design has limitations too. To decouple and isolate each microservice from the others, we want each API interface to be as pure as possible, but because a REST API combines verbs, headers, payloads, URL identifiers, and resource URLs, keeping the interface simple and functional is costly to maintain. In addition, since the whole pipeline is split into many microservices, there will be a large number of internal request/response calls, so each call needs to keep latency as low as possible. gRPC outperformed REST in connection time, transport time, and encoding/decoding time, which is why we decided to replace all of our REST APIs with gRPC.

Unlike a REST API, where the client only needs to know the URL and the HTTP verb to call an endpoint, a gRPC client needs the Protocol Buffers (.proto) file in order to make an RPC call. Protocol Buffers is the IDL (Interface Definition Language) that gRPC uses: a .proto file describes the request and response messages, including each field's type and its field number, which identifies the field in the encoded binary message. Protocol Buffers is a big part of why gRPC is popular among RPC frameworks, since a .proto file acts as both documentation and a versioning tool. Messages can stay forward and backward compatible, not simply because the format is binary, but because each field is identified by its number: a parser skips fields it does not know and uses default values for fields that are missing, as long as existing field numbers are never reused or changed.
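To make the point about field numbers concrete: on the wire, a protobuf message is a sequence of (tag, value) pairs, where the tag packs the field number from the .proto file together with a wire type. The pure-Python sketch below hand-encodes two fields of a hypothetical message `User { int32 id = 1; string name = 2; }`. It only illustrates the encoding; real code should use the generated classes (for example, their `SerializeToString()` method) instead.

```python
def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a protobuf base-128 varint."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def encode_field(field_number: int, value) -> bytes:
    """Encode one field as tag (field number + wire type) followed by its payload."""
    if isinstance(value, int):
        wire_type = 0  # VARINT
        payload = encode_varint(value)
    elif isinstance(value, str):
        wire_type = 2  # LEN (length-delimited)
        data = value.encode("utf-8")
        payload = encode_varint(len(data)) + data
    else:
        raise TypeError(f"unsupported type: {type(value).__name__}")
    return encode_varint((field_number << 3) | wire_type) + payload


# Hypothetical message: message User { int32 id = 1; string name = 2; }
encoded = encode_field(1, 150) + encode_field(2, "testing")
print(encoded.hex(" "))  # 08 96 01 12 07 74 65 73 74 69 6e 67
```

Only the field numbers appear in the bytes, not the field names, which is why renumbering a field breaks compatibility while renaming it does not affect the binary format.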

Because the .proto file defines both the input and the output message formats, a client that does not have the latest version may get empty (default) values, or even errors, when calling a service that has just been updated. It is therefore important to maintain a system that makes it easy to keep the .proto files in sync between servers and clients.
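To show how a stale client ends up with empty values, here is a minimal pure-Python sketch (not the protobuf library's API) of a reader that decodes a newer message using an older schema. The `User` message, its field numbers, and the v2 change (moving the name from field 2 to a new field 3) are all hypothetical, and the decoder handles only the varint and length-delimited wire types.

```python
def read_varint(buf: bytes, pos: int) -> tuple[int, int]:
    """Decode a base-128 varint starting at pos; return (value, next position)."""
    result, shift = 0, 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


def decode(buf: bytes, schema: dict[int, tuple[str, object]]) -> dict:
    """Decode a message using only the fields the reader knows about.

    schema maps field number -> (field name, default value). Unknown field
    numbers are skipped; known fields that are absent keep their default.
    Only the VARINT (0) and LEN (2) wire types are handled in this sketch.
    """
    result = {name: default for name, default in schema.values()}
    pos = 0
    while pos < len(buf):
        tag, pos = read_varint(buf, pos)
        field_number, wire_type = tag >> 3, tag & 0x7
        if wire_type == 0:
            value, pos = read_varint(buf, pos)
        elif wire_type == 2:
            length, pos = read_varint(buf, pos)
            value, pos = buf[pos:pos + length], pos + length
        else:
            raise ValueError(f"wire type {wire_type} is not handled in this sketch")
        if field_number in schema:
            name, default = schema[field_number]
            result[name] = value.decode("utf-8") if isinstance(default, str) else value
    return result


# Hypothetical v2 of "message User": name moved from field 2 to a new field 3.
# Bytes for id=150 (field 1) and full_name="testing" (field 3):
v2_bytes = b"\x08\x96\x01\x1a\x07testing"

old_client = decode(v2_bytes, {1: ("id", 0), 2: ("name", "")})
new_client = decode(v2_bytes, {1: ("id", 0), 3: ("full_name", "")})
print(old_client)  # {'id': 150, 'name': ''}  (stale schema: empty value)
print(new_client)  # {'id': 150, 'full_name': 'testing'}
```

The stale client does not crash; it silently sees a default value, which is exactly why keeping .proto files in sync matters.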

Solutions to this problem:

There are several practices that can solve this problem. I will list them below:

Using a Git Submodule:

The first solution is straightforward: create a central Git repository to store the .proto files, and have every project add it to its own Git repository as a Git submodule.

This method solves the sharing problem, but a Git project nested inside another one can be hard to manage. Moreover, not every microservice needs every .proto file; a microservice might only need the ones that satisfy its needs. And with a single shared .proto repository, it is difficult to notify only those microservices whose .proto files were updated on the server side.

Using the gRPC Server Reflection Protocol:

The gRPC server reflection protocol is a great tool that lets clients construct requests at runtime without having stub information precompiled into the client. However, support for it is limited to certain languages; at the time of writing, Node was not yet supported. More information can be found in the gRPC Server Reflection Protocol documentation.

Using a Private Package Repository:

The last method I'm going to introduce is publishing the generated gRPC stub files (generated code) to a private package repository. I think this is the best option when the gRPC Server Reflection Protocol is not available. It lets the server side choose which stub files to publish and at which version, so we control both the scope and the version of what our client microservices can use. It also makes it easy for clients to compare the versions we release on the server side and update to a newer one.
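On the client side, pip's version specifiers (for example, `pip install "example>=0.61"`) are the usual way to pick up a release. A service can additionally check at start-up that its installed stubs are recent enough. Below is a small stdlib-only sketch using `importlib.metadata`; the package name `example` matches the setup.py later in this article, while the minimum version and the idea of a start-up check are hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version


def parse_version(text: str) -> tuple[int, ...]:
    """Parse a plain dotted numeric version ('0.61' -> (0, 61)).

    Trailing zero components are dropped so that '1.0' and '1.0.0' compare equal.
    Pre-release or local suffixes are not handled; the third-party `packaging`
    library implements the full PEP 440 rules.
    """
    parts = [int(part) for part in text.split(".")]
    while len(parts) > 1 and parts[-1] == 0:
        parts.pop()
    return tuple(parts)


def stubs_are_current(package: str, minimum: str) -> bool:
    """Return True if `package` is installed at a version >= `minimum`."""
    try:
        installed = version(package)
    except PackageNotFoundError:
        return False
    return parse_version(installed) >= parse_version(minimum)


# Hypothetical start-up check in a client service:
# if not stubs_are_current("example", "0.61"):
#     raise SystemExit("gRPC stubs are outdated; run: pip install -U example")
```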

Implementation: Using a Sonatype Nexus 3 Repository to Publish and Manage gRPC Stub Files

We will use Sonatype Nexus to manage our private package repository.

Install Sonatype Nexus 3:

Installing Nexus 3 is very simple, and Sonatype provides an official installation guide. Here I will just use Docker.

Run the command below to start Nexus 3 in Docker:

docker run -d -p 8081:8081 -p 8082:8082 -p 8083:8083 --name my-nexus sonatype/nexus3:latest
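Nexus usually needs a minute or more to start, and the admin password file only appears after the first start-up completes. If you want to script the wait, here is a stdlib-only polling sketch. It assumes the Nexus 3 status endpoint `/service/rest/v1/status` (present in recent Nexus 3 releases; check the API docs for your version) and the 8081 port mapping from the docker command above.

```python
import http.client
import time
import urllib.request


def wait_for_nexus(base_url: str = "http://127.0.0.1:8081",
                   timeout_s: float = 300.0, interval_s: float = 5.0) -> bool:
    """Poll the Nexus status endpoint until it returns HTTP 200 or time runs out."""
    url = base_url.rstrip("/") + "/service/rest/v1/status"
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with urllib.request.urlopen(url, timeout=5) as resp:
                if resp.status == 200:
                    return True
        except (OSError, http.client.HTTPException):
            pass  # refused, reset, timed out, or an HTTP error while starting up
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)


# Usage: wait_for_nexus() returns True once Nexus answers, False after ~5 minutes.
```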

Once Nexus 3 has finished starting, you can read the default admin password with the command below:

docker exec -it my-nexus cat /nexus-data/admin.password

Set Up the Repository:

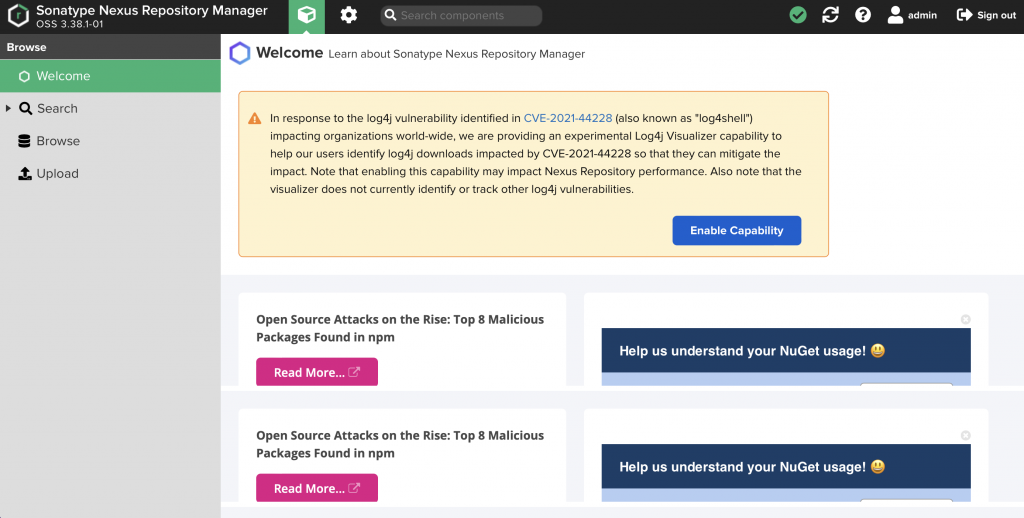

First, log in to Nexus 3. The default Nexus 3 port is 8081, so if you installed Nexus 3 on localhost you should be able to log in at http://127.0.0.1:8081 with the username admin and the password from the previous step.

After logging in, you should see the page below:

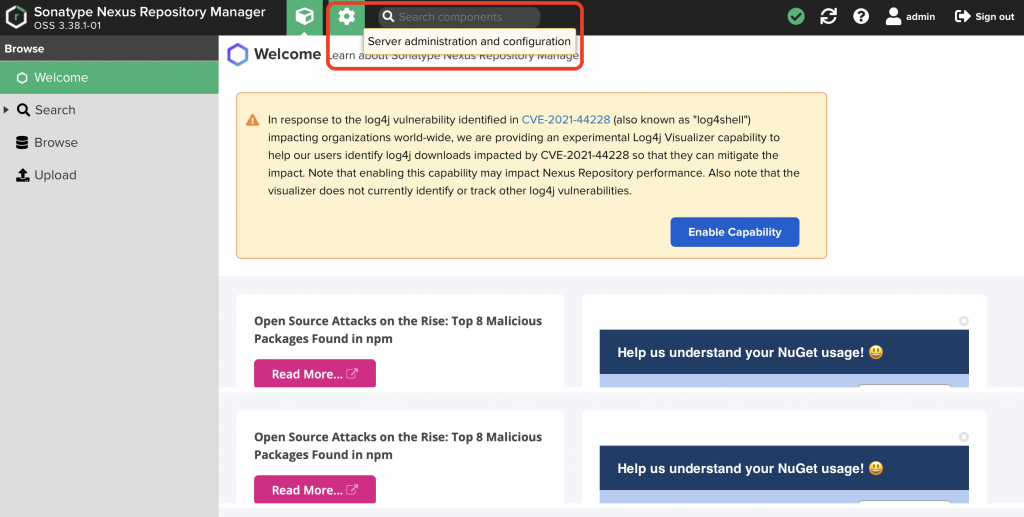

Click “Server Administration and configuration”

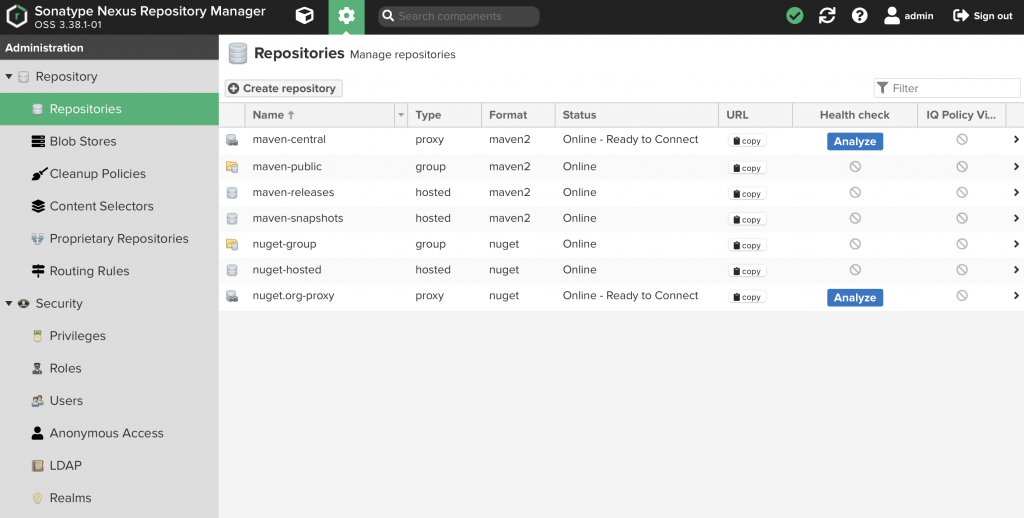

Choose Repositories to see all repositories:

In this example, I will create a PyPI package repository. Click Create repository:

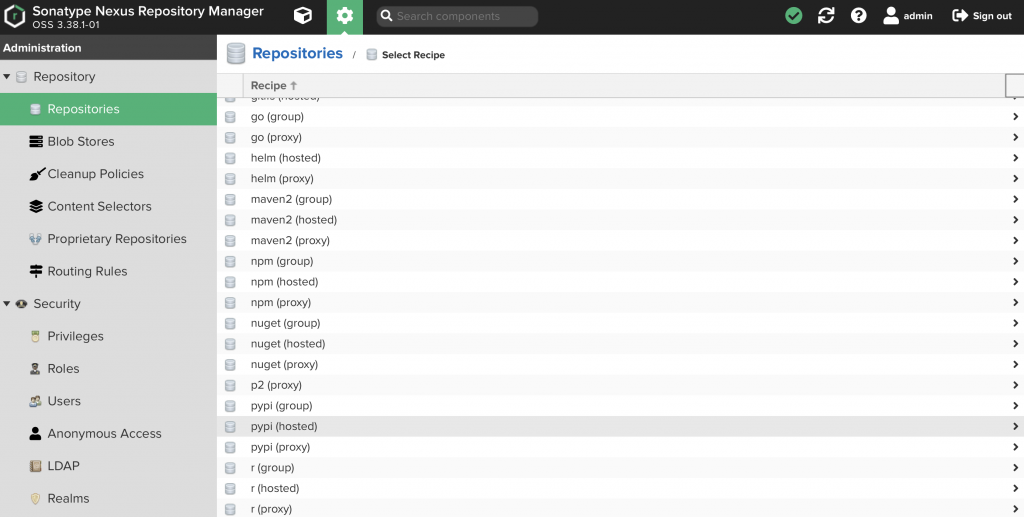

Make sure the pypi (hosted) recipe is selected:

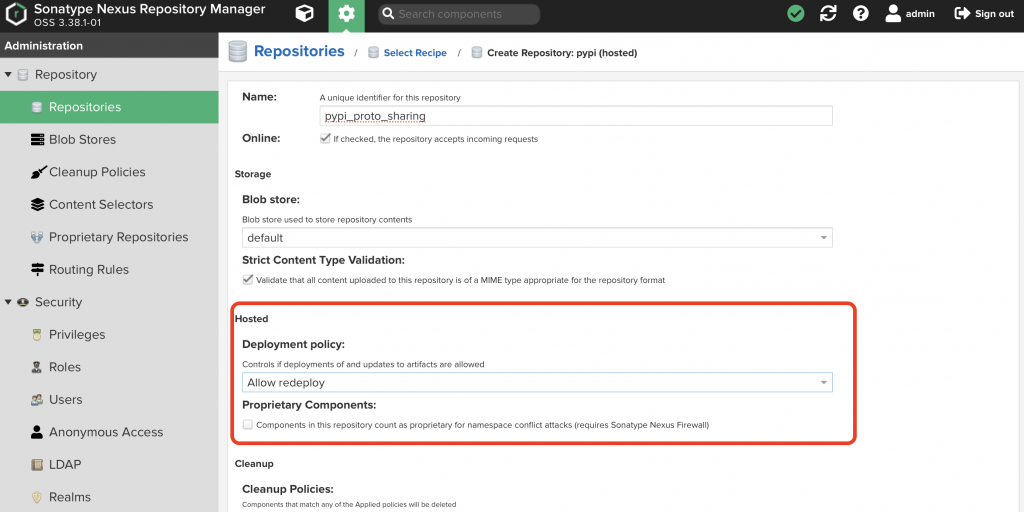

Make sure the deployment policy is set to Allow redeploy; otherwise, you cannot upload a version that already exists in the repository again:
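If you prefer to script this step instead of clicking through the UI, Nexus 3 also exposes a repositories REST API. The sketch below assumes the `POST /service/rest/v1/repositories/pypi/hosted` endpoint and the field names shown in recent Nexus 3 API docs (verify them against the API browser of your own instance), the `default` blob store, and the repository name `pypi_proto_sharing` used in the .pypirc later in this article.

```python
import base64
import json
import urllib.request


def pypi_hosted_payload(name: str, blob_store: str = "default") -> dict:
    """Request body for a hosted PyPI repository with redeploys allowed."""
    return {
        "name": name,
        "online": True,
        "storage": {
            "blobStoreName": blob_store,
            "strictContentTypeValidation": True,
            "writePolicy": "allow",  # assumed to match "Allow redeploy" in the UI
        },
    }


def create_pypi_hosted(base_url: str, user: str, password: str, name: str) -> int:
    """POST the payload to the Nexus repositories API and return the HTTP status."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(
        base_url.rstrip("/") + "/service/rest/v1/repositories/pypi/hosted",
        data=json.dumps(pypi_hosted_payload(name)).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Basic {token}"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return response.status  # 201 Created is expected on success


# Example (needs a running Nexus and the real admin password):
# create_pypi_hosted("http://127.0.0.1:8081", "admin", "<password>", "pypi_proto_sharing")
```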

Generating the Proto Stub Files:

To generate the stub files, protoc is needed. I won't explain how to set up protoc here, since the official guide covers it clearly; please refer to Google's official guide on generated code: Python Generated Code | gRPC

This general protoc command generates the Python message classes (_pb2.py files):

protoc --proto_path=src --python_out=build/gen src/foo.proto src/bar/baz.proto
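Plain protoc with --python_out only produces the message classes; the gRPC client and server stubs (_pb2_grpc.py files) come from the gRPC Python plugin, which the grpcio-tools package (pip install grpcio-tools) bundles as `python -m grpc_tools.protoc`. The sketch below generates both into the package directory that the setup.py below expects. The protos/ folder, foo.proto, and the package name `example` under src/ are a hypothetical layout. Note that the generated _pb2_grpc.py files import their _pb2 modules by top-level name, so inside a package you may need to adjust those imports.

```python
import subprocess
import sys
from pathlib import Path


def protoc_args(proto_root: str, out_dir: str, protos: list[str]) -> list[str]:
    """Build a grpc_tools.protoc command that emits *_pb2.py and *_pb2_grpc.py files."""
    return [
        sys.executable, "-m", "grpc_tools.protoc",
        f"--proto_path={proto_root}",
        f"--python_out={out_dir}",       # message classes (*_pb2.py)
        f"--grpc_python_out={out_dir}",  # client/server stubs (*_pb2_grpc.py)
        *protos,
    ]


def generate_stubs(proto_root: str, out_dir: str, protos: list[str]) -> None:
    """Generate stubs into out_dir and make it an importable package."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    (out / "__init__.py").touch()  # lets find_packages('src') discover the package
    subprocess.run(protoc_args(proto_root, out_dir, protos), check=True)


# Hypothetical layout: .proto sources in protos/, package "example" under src/.
# generate_stubs("protos", "src/example", ["protos/foo.proto"])
```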

To upload the stub package to Nexus 3, I will use twine.

First, set up twine by creating a .pypirc file in your home directory (~/.pypirc, or %USERPROFILE%\.pypirc on Windows):

```ini
[distutils]
index-servers =
    pypi

[pypi]
repository: http://127.0.0.1:8081/repository/pypi_proto_sharing/
username: admin
password: password_you_have
```

A setup.py will be needed:

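```python
from setuptools import setup, find_packages

setup(
    name='example',
    version='0.61',  # bump for every release so clients can pick up new stubs
    license='MIT',
    author="Zichen Zheng",
    author_email='me@155.94.241.37',
    # Generated stub modules live under src/<package>/, each with an __init__.py
    packages=find_packages('src'),
    package_dir={'': 'src'},
    url='155.94.241.37',
    keywords='ProtoFiles',
    install_requires=[
        'protobuf',  # runtime dependency of the generated *_pb2.py modules
        'grpcio',    # runtime dependency of the generated *_pb2_grpc.py stubs
    ],
)
```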

Then run setup.py to build a source distribution into the dist folder:

python setup.py sdist

After the dist folder is generated, use twine to upload the package:

twine upload -r pypi dist/* --verbose

Using the Package

To use the package, we need to configure pip.

On Windows, open %APPDATA% (for example, from the Run dialog) and create a pip folder there.

On macOS, the file can live in any of the locations below; if none exists, just create one:

$HOME/Library/Application Support/pip/pip.conf

$HOME/.pip/pip.conf

$HOME/.config/pip/pip.conf

Create the config file in that pip folder, named pip.ini on Windows or pip.conf on macOS:

```ini
[global]
trusted-host = 127.0.0.1:8081
index = http://127.0.0.1:8081/repository/pypi_proto_sharing/pypi
index-url = http://127.0.0.1:8081/repository/pypi_proto_sharing/simple
no-cache-dir = false
```
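The per-platform steps above can also be scripted. Below is a minimal sketch that picks one per-user config location per platform (the macOS path is one of the options listed above; on Linux it uses ~/.config/pip/pip.conf) and sets index-url and trusted-host under [global] with the stdlib configparser. The function names are hypothetical, and rewriting the file this way keeps other keys but drops any comments.

```python
import configparser
import os
import sys
from pathlib import Path
from typing import Optional


def pip_config_path(home: Optional[Path] = None) -> Path:
    """Per-user pip config file path for the current platform."""
    home = home or Path.home()
    if sys.platform == "win32":
        appdata = os.environ.get("APPDATA", str(home / "AppData" / "Roaming"))
        return Path(appdata) / "pip" / "pip.ini"
    if sys.platform == "darwin":
        return home / "Library" / "Application Support" / "pip" / "pip.conf"
    return home / ".config" / "pip" / "pip.conf"


def write_pip_config(path: Path, index_url: str, trusted_host: str) -> None:
    """Set index-url and trusted-host under [global], keeping other keys (not comments)."""
    config = configparser.ConfigParser(interpolation=None)
    config.read(path)  # silently skipped if the file does not exist yet
    if not config.has_section("global"):
        config.add_section("global")
    config.set("global", "index-url", index_url)
    config.set("global", "trusted-host", trusted_host)
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("w", encoding="utf-8") as fh:
        config.write(fh)


# write_pip_config(pip_config_path(),
#                  "http://127.0.0.1:8081/repository/pypi_proto_sharing/simple",
#                  "127.0.0.1:8081")
```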

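Then you can install the package with pip, for example pip install example. Because index-url points only at the hosted repository, pip will not find packages from the public PyPI (such as protobuf); if the stub package declares such dependencies, also add extra-index-url = https://pypi.org/simple, or point index-url at a Nexus group repository that includes a PyPI proxy.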

Conclusion

By using a private package repository, we can easily give each microservice the Protocol Buffers stubs it needs and enable versioning. In this article I only showed an example with PyPI, but Nexus 3 also supports npm and package formats for many other languages. This should solve the problem of sharing Protocol Buffers across different microservices.